While cost-efficiency is one of the key reasons businesses move to the cloud, the reality can fall short of expectations: by some industry estimates, around 30% of cloud spend is wasted. However, this doesn’t mean you should return to an on-premises setup.

VR Company (NDA)

Discover a solution that harnesses VR and AI to instantly turn vacant apartments into warm, inviting homes and changes the way we perceive and use empty spaces. Read the case study to see how our DevOps expertise helps unlock the full potential of VR and AI.

TEAM

20-50 people

PERIOD OF COLLABORATION

January 2020 - present

CLIENT’S LOCATION

Tel Aviv, Israel

About the client

This case study features a budding virtual reality (VR) startup. The business is built around a mobile app that helps prospective tenants and homeowners instantly decide whether a house or an apartment meets their needs.

The solution allows users to virtually furnish a space in real time based on their design preferences, lifestyle, and budget. During a tour, users point their smartphone camera at the room and, with a couple of taps, see how the chosen furniture and decor will look in the space. Users can also buy or rent home decor right from the app.

Among other tools, the client used:

- Pulumi

- AWS EKS Fargate

- AWS Aurora RDS

- AWS Lambda

- Elastic

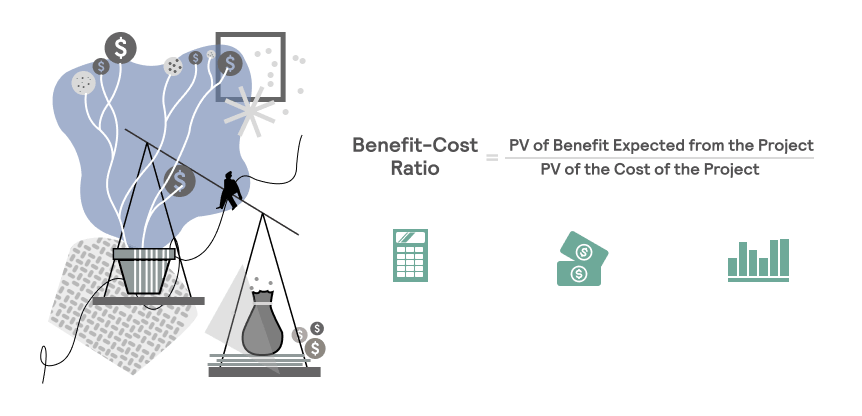

The Solution: Elastic Infrastructure with Optimal BCR

We set the ball rolling with an audit of the app’s infrastructure. Once we settled on the project scope, we assembled a software development team: a backend developer, frontend developers, and a QA expert on the client’s side, and a DevOps engineer on our side.

The infrastructure was set up so that:

- It has an optimal BCR (benefit-cost ratio): the client pays only for the compute time they use.

- It’s easy to scale up if the client’s user base grows quickly and scale down to almost zero if the traffic volume drops.
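To make the “scale up fast, scale down to almost zero” requirement concrete, here is a minimal sketch of a target-tracking scaling rule in Python. It is not the client’s actual configuration: the function name `desired_replicas`, the requests-per-second metric, and the default limits of 1 and 20 replicas are assumptions chosen for illustration.

```python
import math


def desired_replicas(current_rps: float, target_rps_per_replica: float,
                     min_replicas: int = 1, max_replicas: int = 20) -> int:
    """Return how many replicas keep each one near its target load.

    Hypothetical target-tracking rule: replicas grow with traffic and
    shrink to `min_replicas` (close to zero) when traffic disappears.
    """
    if target_rps_per_replica <= 0:
        raise ValueError("target_rps_per_replica must be positive")
    needed = math.ceil(current_rps / target_rps_per_replica)
    # Clamp to the configured floor and ceiling.
    return max(min_replicas, min(max_replicas, needed))


# Traffic spike: 450 req/s at 50 req/s per replica.
print(desired_replicas(450, 50))   # 9
# Quiet night: no traffic, so fall back to the floor.
print(desired_replicas(0, 50))     # 1
# Extreme spike is capped at max_replicas.
print(desired_replicas(5000, 50))  # 20
```

The floor keeps one warm replica available, while the ceiling caps spend during unexpected spikes; setting `min_replicas=0` would model a full scale-to-zero.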

Here is how we achieved this:

Move #1: AWS EKS Fargate

To run containers without paying for idle compute resources, we chose AWS EKS Fargate, which provides compute capacity for containers on demand. The technology comes with the following benefits:

- Automated resource management. The client doesn’t need to provision, manage, or scale virtual machines for containers; Fargate takes care of this.

- Cost-efficiency. The client pays only for the vCPU and memory their pods request, and only while those pods are running.

- Availability. AWS EKS Fargate is designed for high availability: when pods are spread across subnets in several Availability Zones, workloads keep running even if one zone goes down.

- Security. AWS doesn’t take shortcuts when it comes to security: Fargate provides resource isolation, encryption of data in transit and at rest, and access control, among many other security features.
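The pay-per-use model can be illustrated with a rough cost estimate. The sketch below assumes per-second billing on requested vCPU and memory with a one-minute minimum charge, which matches how AWS describes Fargate pricing; the helper `fargate_pod_cost` is our own illustration, and the rates in the usage example are hypothetical placeholders, not current AWS prices. It also ignores how EKS rounds pod resource requests up to supported Fargate sizes.

```python
import math

SECONDS_PER_HOUR = 3600
MINIMUM_BILLED_SECONDS = 60  # assumed one-minute minimum charge per pod


def fargate_pod_cost(vcpu: float, memory_gb: float, runtime_seconds: float,
                     vcpu_hour_rate: float, gb_hour_rate: float) -> float:
    """Estimate the compute cost of a single pod billed per second.

    Hypothetical helper: rates are passed in because real prices vary by
    region and change over time.
    """
    # Round partial seconds up, then apply the minimum charge.
    billed_seconds = max(MINIMUM_BILLED_SECONDS, math.ceil(runtime_seconds))
    billed_hours = billed_seconds / SECONDS_PER_HOUR
    return billed_hours * (vcpu * vcpu_hour_rate + memory_gb * gb_hour_rate)


# Hypothetical rates for illustration only.
VCPU_RATE, GB_RATE = 0.04, 0.004

# A 1 vCPU / 2 GB pod that runs 2 hours a day vs. one that runs all day.
part_time = fargate_pod_cost(1, 2, 2 * SECONDS_PER_HOUR, VCPU_RATE, GB_RATE)
always_on = fargate_pod_cost(1, 2, 24 * SECONDS_PER_HOUR, VCPU_RATE, GB_RATE)
print(f"part-time: ${part_time:.3f}/day, always-on: ${always_on:.3f}/day")
```

Whatever the actual rates, cost scales linearly with runtime, so with these placeholders the pod that runs two hours a day costs one-twelfth as much as the always-on pod.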

Move #2: AWS Aurora RDS

For the database, we selected AWS Aurora RDS, a cloud-native relational database service compatible with MySQL and PostgreSQL. It stood out to us thanks to several advantages:

- High availability and reliability. Aurora is a fault-tolerant service that stores six copies of the data across three Availability Zones, so the client’s data remains available even if a storage node or an entire zone fails.

- Scalability. Aurora RDS is highly elastic: storage grows automatically, and the client can add read replicas or resize instances whenever the load changes.

- Performance. Aurora RDS delivers low-latency responses even under heavy workloads. This is critical here, as running many VR models requires high performance.

Move #3: AWS Lambda

Our client also runs many additional microservices. They interact with the main application only occasionally and stay idle most of the time, so we needed a solution that would let the client avoid paying for compute resources while these microservices are idle.

Eventually, we settled on AWS Lambda, a serverless event-driven computing service. This decision meant:

- Reduced operational costs. AWS Lambda runs the code only in response to events. This means that the client only pays for the computation time they consume, resulting in significant cost savings.

- Scalability. Lambda scales automatically as the workload changes. As a result, our client doesn’t need to worry about capacity planning, and their services can handle traffic spikes without manual intervention.
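For illustration, here is a minimal Python Lambda handler for an occasional, event-driven microservice. The `lambda_handler(event, context)` signature is the standard Python entry point for Lambda; the event shape assumes an API Gateway proxy integration, and the order-total logic is a hypothetical example, not one of the client’s actual services.

```python
import json


def lambda_handler(event, context):
    """Hypothetical microservice: total up a decor order sent via API Gateway.

    Lambda bills per request and for the time this function runs; while no
    events arrive, no compute time is billed.
    """
    try:
        order = json.loads(event.get("body") or "{}")
        # Assumed payload: {"items": [{"price": 19.99, "qty": 2}, ...]}
        items = order["items"]
        total = sum(item["price"] * item["qty"] for item in items)
    except (KeyError, TypeError, ValueError) as exc:
        # Malformed JSON or missing fields: reject the request.
        return {"statusCode": 400, "body": json.dumps({"error": str(exc)})}
    return {"statusCode": 200, "body": json.dumps({"total": round(total, 2)})}


# Local usage example; `context` is unused here, so None is enough.
event = {"body": json.dumps({"items": [{"price": 19.99, "qty": 2},
                                        {"price": 5.0, "qty": 1}]})}
print(lambda_handler(event, None))
```

The handler keeps no state between invocations, which is what lets Lambda run as many or as few copies as incoming events require.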

Move #4: Pulumi

We implemented the infrastructure as code (IaC) with Pulumi.

This lets the client keep the infrastructure under version control and deploy it in a repeatable, predictable way. In addition, Pulumi provides resource abstractions that simplify creating and managing infrastructure resources. On top of this, Pulumi has a much gentler learning curve than tools like Terraform, which relies on its own configuration language (HCL): Pulumi supports general-purpose programming languages, including the ones the client’s team already works with.

Move #5: “Suspender”

To let the client easily scale the development and staging environments as needed, we implemented the “suspender.” This feature allows developers to suspend an environment (scale it down to almost zero) with a single click and to scale it back up just as easily. In addition, a suspended environment is automatically scaled up when two or more requests arrive at its load balancer.

As a result, the client doesn’t pay for idle environments, which significantly reduces costs.
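The suspender’s behavior can be modeled as a small state machine. This Python sketch is hypothetical: the `Environment` class, its method names, and the idea that only application replicas drop to zero (while shared pieces such as the load balancer keep running, hence “almost zero”) are our assumptions. Only the two-request wake-up threshold comes from the description of the feature. A real implementation would change replica counts through the Kubernetes API; here the state simply lives in fields.

```python
from dataclasses import dataclass

# From the feature description: two or more requests wake the environment.
WAKE_UP_THRESHOLD = 2


@dataclass
class Environment:
    """Hypothetical dev/staging environment managed by a "suspender"."""
    name: str
    working_replicas: int   # replica count used while the environment is active
    replicas: int = 0       # replica count currently running (assumed: apps only)
    suspended: bool = True

    def suspend(self) -> None:
        """The "button": scale application workloads down to zero."""
        self.replicas = 0
        self.suspended = True

    def resume(self) -> None:
        """Scale back up to the normal working size."""
        self.replicas = self.working_replicas
        self.suspended = False

    def on_pending_requests(self, count: int) -> None:
        """Auto-resume when enough requests reach the load balancer."""
        if self.suspended and count >= WAKE_UP_THRESHOLD:
            self.resume()


staging = Environment("staging", working_replicas=3)
staging.on_pending_requests(1)  # one stray request is below the threshold
print(staging.replicas)         # 0
staging.on_pending_requests(2)  # two requests wake the environment up
print(staging.replicas)         # 3
staging.suspend()               # a developer clicks "suspend"
print(staging.replicas)         # 0
```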

Since the project is in development, the infrastructure capacity is minimal. Still, if necessary, it can be easily configured for considerably higher daily traffic volumes.

Next Steps: New Strategies for Task Tracking and Management

The project is still underway: the client continues to improve their infrastructure as code, and new requests keep coming. Currently, we are focused on developing a robust strategy for tracking and managing these requests.