While listening to the episode of Kubernetes podcast devoted to etcd I noted that etcd uses Raft consensus protocol under the hood to manage a highly-available replicated log. Since I've heard of Raft several times before (Consul, Zookeeper), I decided to take a deeper look into it and finally figure out its purpose.

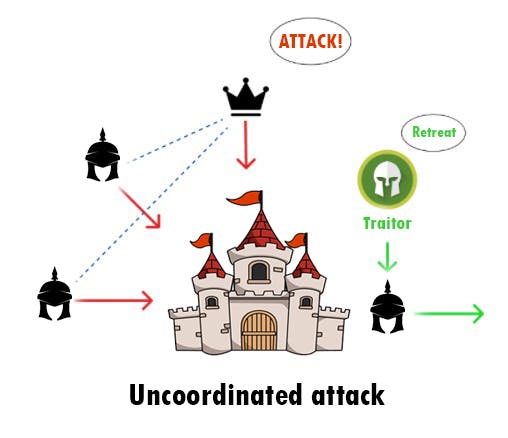

Turns out, there is a perfect explanation of why we need a consensus protocol in the first place and it's called Byzantine fault . The Wiki page explains it really well, but let me give you a short version here. Imagine the Byzantine army besieging a city, deciding whether to storm the city or keep the siege. Army's 9 generals decide to get ready for the storm but not to attack until they all agree on a common decision. The partial attack will allow the enemy to overpower Byzantines and defeat them, which is worse than a full-scale attack (Byzantine wins) or inaction (Byzantine does not lose). The state of affairs is getting more difficult as there is an unknown amount of treacherous generals which will try to achieve the halfhearted attack. The situation imposes a question: in which way should generals communicate and come to a common decision and with what number of treacherous generals is that even possible?

Let's take that question into the technology field. Having a distributed cluster of databases, how do we ensure consistency across the cluster, detect faulty nodes, and minimize the impact of a node being down? This and some additional questions are answered by Raft.