The practices and tips outlined below can help startups build a system that can handle the complexities and demands of dynamic models.

Streamline data management

The data you feed into ML models affects their accuracy and fairness. To make sure you train your models on the highest-quality data available, establish standards for data collection, preprocessing, and storage. The following practices are essential:

- Establish standardized data collection protocols to ensure consistency, fairness, and relevancy for accurate modeling.

- Implement data validation checks during data collection to detect errors early and reduce debugging time.

- Incorporate data preprocessing to make the data suitable for ML models. Normalizing puts numerical features on a common scale, while encoding converts categorical values into numerical data that ML models can process.

- Use a central unified repository for ML features and datasets to easily manage and retrieve data for specific tasks and applications. Keeping everything in one place also makes it easier to log access attempts and data changes.

- Maintain scripts for creating and splitting datasets so you can easily recreate models in different environments for testing and development purposes.
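To make the validation, preprocessing, and splitting practices above more concrete, here is a minimal sketch in plain Python. The schema (`user_id`, `age`, `plan`), the age bounds, and all function names are hypothetical and chosen only for illustration. In practice, you would likely rely on a dedicated data validation or preprocessing library instead.

```python
import hashlib

# Hypothetical schema: the fields every raw record is expected to contain.
REQUIRED_FIELDS = {"user_id", "age", "plan"}


def validate(record):
    """Return a list of problems found in one raw record (empty list = valid)."""
    problems = []
    missing = REQUIRED_FIELDS - set(record)
    if missing:
        problems.append(f"missing fields: {sorted(missing)}")
    age = record.get("age")
    if age is not None and not (isinstance(age, (int, float)) and 0 <= age <= 120):
        problems.append(f"age out of range: {age!r}")
    return problems


def min_max_normalize(values):
    """Rescale numbers to the [0, 1] range so features share a common scale."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def one_hot_encode(values):
    """Convert categorical labels into numeric indicator vectors."""
    categories = sorted(set(values))
    return [[1 if v == c else 0 for c in categories] for v in values]


def assign_split(record_id, test_fraction=0.2):
    """Assign a record to 'train' or 'test' based on a hash of its ID.

    Because the assignment depends only on the ID, the same split can be
    recreated in any environment without storing a random seed.
    """
    digest = hashlib.sha256(str(record_id).encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    return "test" if bucket < test_fraction * 100 else "train"


if __name__ == "__main__":
    print(validate({"user_id": 1, "age": 300}))
    print(min_max_normalize([10, 20, 30]))
    print(one_hot_encode(["free", "pro", "free"]))
    print(assign_split("user-42"))
```

Hash-based splitting is one of several ways to keep splits reproducible. A fixed random seed stored alongside the script is a common alternative.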

By placing data management at the heart of your MLOps strategy, you can increase the likelihood of creating fair and accurate models that drive value for your business.

Enforce model governance

You should enforce rules for registering, validating, and approving models. Model governance can differ based on your industry, regulatory landscape, and ML use cases. However, some practices are uniform across most organizations:

- Define features to ensure employees in different departments consistently understand what each feature represents.

- Maintain metadata and annotation policies to help teams monitor data, code, and parameters so that teams working on the same tasks can collaborate.

- Apply quality assurance standards to ensure that ML models meet your standards (including sufficient accuracy, explainability, and security).

- Create checking, releasing, and reporting guidelines that control risk and support compliance with government regulations and data privacy standards.

- Implement change management procedures to ensure new data and algorithm updates don’t introduce risk or reduce the ML model’s performance.
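As a rough illustration of how validation and approval rules might be enforced in code, the sketch below checks a candidate model against a written policy before it can be released. The `ModelCandidate` class, the `POLICY` thresholds, and the notion of a "model card" flag are all assumptions made for this example. Your actual rules will depend on your industry and regulatory requirements.

```python
from dataclasses import dataclass, field


@dataclass
class ModelCandidate:
    """A model version submitted for approval (hypothetical structure)."""
    name: str
    version: str
    metrics: dict
    has_model_card: bool = False  # documentation of features and intended use
    approvals: list = field(default_factory=list)


# Hypothetical policy values, for illustration only.
POLICY = {"min_accuracy": 0.90, "max_latency_ms": 200, "required_approvals": 2}


def review(candidate, policy=POLICY):
    """Return (approved, reasons); reasons lists every rule the candidate fails."""
    reasons = []
    if candidate.metrics.get("accuracy", 0.0) < policy["min_accuracy"]:
        reasons.append("accuracy below minimum")
    if candidate.metrics.get("latency_ms", float("inf")) > policy["max_latency_ms"]:
        reasons.append("latency above maximum")
    if not candidate.has_model_card:
        reasons.append("missing model card")
    if len(set(candidate.approvals)) < policy["required_approvals"]:
        reasons.append("not enough approvals")
    return (not reasons, reasons)


if __name__ == "__main__":
    candidate = ModelCandidate(
        name="churn-model",
        version="1.3.0",
        metrics={"accuracy": 0.80, "latency_ms": 120},
    )
    print(review(candidate))
```

Returning every failed rule, rather than stopping at the first one, gives reviewers a complete picture of what must change before the model can be approved.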

As a cornerstone of an MLOps infrastructure, model governance promotes consistency, quality, and compliance throughout the model lifecycle.

Establish performance metrics

Incorporating MLOps metrics helps teams understand whether models operate as expected or drift from their optimal performance. Different models have different key performance indicators (KPIs), but here are the most common ones:

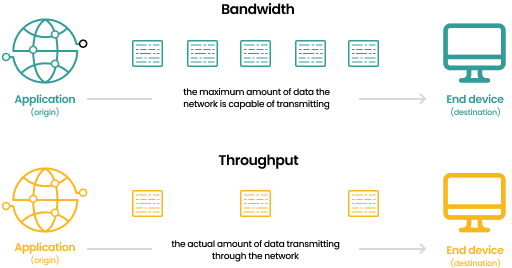

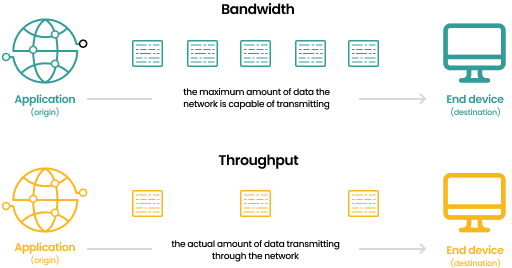

- Throughput: Number of decisions (predictions) that a machine learning model can handle per unit of time

- Accuracy: Percentage of correct decisions made by the model (a widely used metric for classification problems, though it can be misleading on imbalanced datasets)

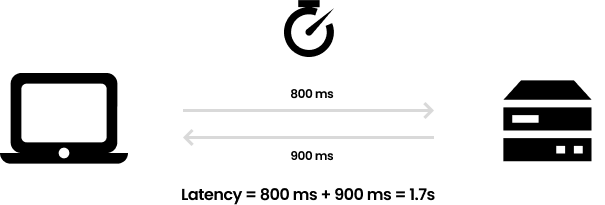

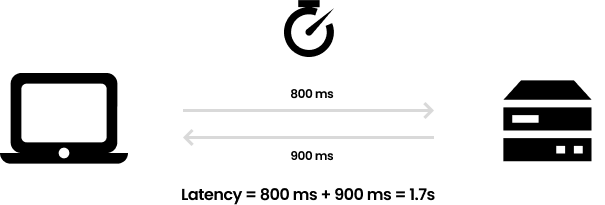

- Latency: The length of time the model needs to respond to a request

- Resource utilization: How much CPU, GPU, and memory the system needs to complete tasks

- Error rates: How often a model fails to complete a task or returns an incorrect or invalid result (for regression problems, error is typically measured with metrics such as mean absolute error or root mean squared error instead)
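The sketch below shows one simple way to compute several of these metrics with the Python standard library. The function names are hypothetical, and `error_rate` here covers only the share of incorrect predictions, not failed requests. Resource utilization is omitted because it is usually collected by infrastructure monitoring rather than by application code.

```python
import time


def accuracy(y_true, y_pred):
    """Share of predictions that match the true labels."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def error_rate(y_true, y_pred):
    """Share of predictions that do not match the true labels."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    wrong = sum(1 for t, p in zip(y_true, y_pred) if t != p)
    return wrong / len(y_true)


def measure_serving(predict, requests):
    """Time each call to `predict` and summarize latency and throughput."""
    if not requests:
        raise ValueError("requests must be non-empty")
    latencies = []
    start = time.perf_counter()
    for request in requests:
        t0 = time.perf_counter()
        predict(request)
        latencies.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - start
    return {
        "mean_latency_s": sum(latencies) / len(latencies),
        "throughput_per_s": len(requests) / elapsed if elapsed > 0 else float("inf"),
    }


if __name__ == "__main__":
    labels = [1, 0, 1, 1]
    predictions = [1, 0, 0, 1]
    print("accuracy:", accuracy(labels, predictions))
    print("error rate:", error_rate(labels, predictions))
    # A stand-in for a real model's predict function.
    print(measure_serving(lambda x: x * 2, list(range(1000))))
```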

Regularly reviewing these metrics helps you identify and resolve performance issues early on. They’re helpful not only for maintaining system performance but also for predicting cost. Additionally, monitoring and dashboard tools, such as Prometheus for collecting metrics and Grafana for visualizing them, help various stakeholders understand MLOps performance.

It’s also a good idea to collect relevant business KPIs to measure the impact of the ML system on your business (for example, click-through rate and revenue uplift before and after deploying a model).

Implement version control

Robust version control for models, data, and configurations makes changes to model development traceable, accountable, and reproducible. It lets you return to any point in the development process and understand what changed and why.

Maintaining a traceable log of ML metadata (assets and artifacts produced during engineering) is also key to reproducing and improving on your past work. To achieve this, we recommend the following:

- Utilize version control tools like DVC (Data Version Control) to track datasets, revert changes, and reproduce workflows when training and deploying ML models.

- Use experiment tracking software such as MLflow or TensorBoard to compare metrics and hyperparameters of different model versions.
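To illustrate the underlying idea without depending on any particular tool, the sketch below fingerprints a dataset and a set of hyperparameters with content hashes and stores them alongside the resulting metrics. It does not use the DVC or MLflow APIs. The `run_record` function and its field names are hypothetical and only meant to show what a traceable record could contain.

```python
import hashlib
import json


def fingerprint_bytes(data):
    """Return a SHA-256 hex digest that changes whenever the content changes."""
    return hashlib.sha256(data).hexdigest()


def run_record(dataset_bytes, params, metrics):
    """Build a metadata record that ties metrics to exact data and parameters."""
    # Sorting keys makes the hash independent of dictionary ordering.
    params_json = json.dumps(params, sort_keys=True)
    return {
        "dataset_sha256": fingerprint_bytes(dataset_bytes),
        "params_sha256": fingerprint_bytes(params_json.encode("utf-8")),
        "params": params,
        "metrics": metrics,
    }


if __name__ == "__main__":
    data_v1 = b"x,y\n1,2\n"
    data_v2 = b"x,y\n1,3\n"
    params = {"learning_rate": 0.1, "max_depth": 3}

    run_a = run_record(data_v1, params, {"accuracy": 0.91})
    run_b = run_record(data_v2, params, {"accuracy": 0.88})

    # Different dataset hashes show the two runs used different data.
    print(run_a["dataset_sha256"] == run_b["dataset_sha256"])
    print(json.dumps(run_a, indent=2))
```

With a record like this for every training run, a change in metrics can be traced back to a change in data, parameters, or both.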

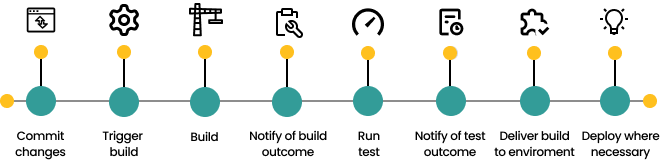

Automate CI/CD pipelines

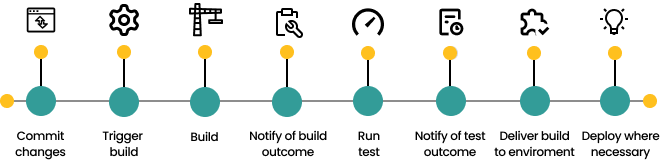

Manual processes are harder to scale than automated ones. They’re also more prone to mistakes. In contrast, many MLOps platforms and tools, like Kubeflow and the MLflow mentioned above, let you define and automate repeatable steps and processes in your CI/CD pipeline to minimize the possibility of errors.

Here are some practices to adopt for a continuous ML pipeline:

- Integrate notebook environments with version control tools to allow data scientists and collaborators to write and automate modular, reusable, and testable source code.

- Implement automated checks and tests to reduce the time between model development, testing, and deployment into production.

- Set up automated alerts for model drift so you can respond quickly to degradations in accuracy and other performance metrics.
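As one possible shape for a drift alert, the sketch below tracks accuracy over a rolling window of recent predictions and flags when it falls below a baseline by more than a set tolerance. The `DriftMonitor` class, its default values, and the assumption that true labels become available for each prediction are all illustrative. Production systems often also watch for shifts in the input data itself.

```python
from collections import deque


class DriftMonitor:
    """Flag when rolling accuracy drops too far below a baseline (hypothetical)."""

    def __init__(self, baseline_accuracy, tolerance=0.05, window=100):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        # deque with maxlen discards the oldest outcome once the window is full.
        self.outcomes = deque(maxlen=window)

    def rolling_accuracy(self):
        """Accuracy over the current window, or None if nothing is recorded."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def should_alert(self):
        """Alert only once the window is full, to avoid noisy early readings."""
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.tolerance

    def record(self, was_correct):
        """Record one prediction outcome and return whether to alert."""
        self.outcomes.append(bool(was_correct))
        return self.should_alert()


if __name__ == "__main__":
    monitor = DriftMonitor(baseline_accuracy=0.9, tolerance=0.05, window=10)
    for _ in range(10):
        monitor.record(True)
    for step in range(3):
        if monitor.record(False):
            print(f"drift alert after {step + 1} new errors, "
                  f"rolling accuracy = {monitor.rolling_accuracy():.2f}")
```

In a pipeline, the alert could trigger a notification or a retraining job instead of a print statement.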

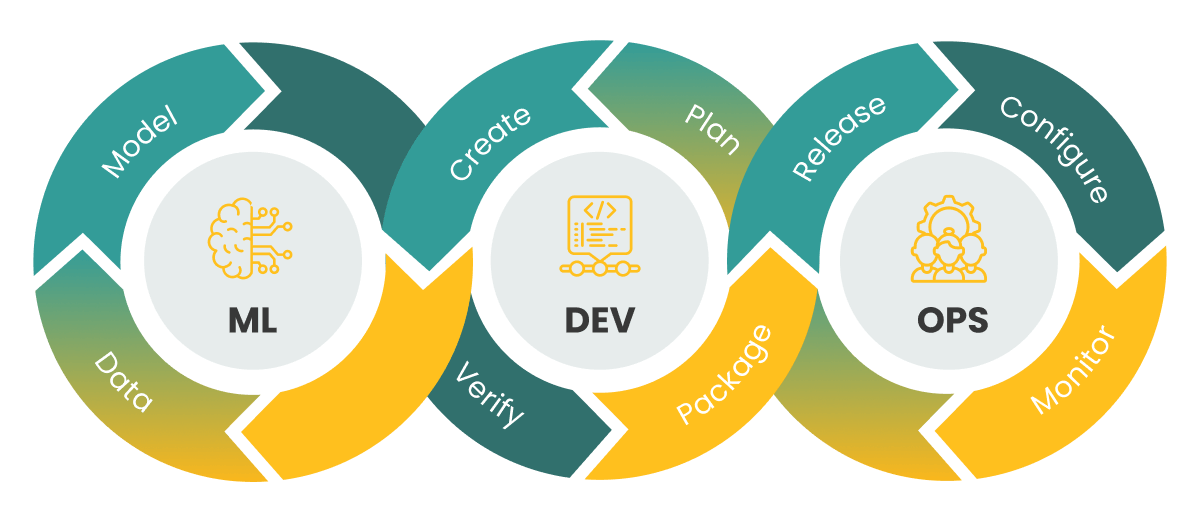

Think of automated CI/CD for MLOps this way: developers use automated CI/CD to merge code changes and automate delivery, whereas ML teams can use it to continuously integrate new data, retrain models, and deploy updates.

Develop a collaboration strategy

MLOps, like DevOps, relies on collaboration between teams with different areas of expertise. In MLOps, that means data scientists, engineers, and operations staff. The goal is to foster a culture where these teams can effectively communicate and work together. Communication may take several different forms:

- Regular sync-ups between departments help align understanding of the objectives and current tasks.

- Comprehensive documentation empowers everyone to follow established processes and definitions.

- Collaboration tools like Trello or Jira make coordinating employees and outsourcing teams easier.

These practices contribute to a robust MLOps framework, improving your AI and ML capabilities so you can deploy models more cost-effectively.